Using semantic similarity, Python, and a reproducible workflow – not gut feel.

Why keyword cannibalization audits break down in ecommerce

Keyword cannibalization is one of those SEO problems everyone knows exists – and almost everyone handles badly.

On ecommerce sites especially, overlap is inevitable:

- Category pages vs product pages

- Festival collections vs themed products

- Multiple products answering the same intent with slightly different framing

The usual process looks like this:

- Open 30–100 URLs

- Skim content manually

- Argue about “intent”

- Guess which page Google might prefer

- Make changes that are hard to justify later

The problem isn’t effort.

The problem is subjectivity.

I wanted a way to answer one question cleanly:

Which pages are actually competing in meaning – not just keywords?

And I wanted it to be:

- Free

- Local

- Reproducible

- Explainable

No paid SEO tools. No APIs. No eyeballing.

The constraints (intentional, not accidental)

This experiment was done on a real WordPress ecommerce site with 100+ product and category pages.

I deliberately restricted myself to:

- ❌ No paid SEO tools

- ❌ No Screaming Frog license hacks

- ❌ No cloud APIs or embeddings services

- ❌ No “trust me, this feels similar” logic

Everything runs:

- Locally

- With open-source libraries

- In a way that anyone on the team could repeat

The core idea: treat cannibalization as a similarity problem

Instead of asking:

“Which page should rank for this keyword?”

I reframed the problem as:

“Which pages are semantically similar enough that Google could treat them as the same answer?”

That shift changes everything.

Modern search engines don’t evaluate pages by keywords alone.

They evaluate meaning.

So that’s what I measured.

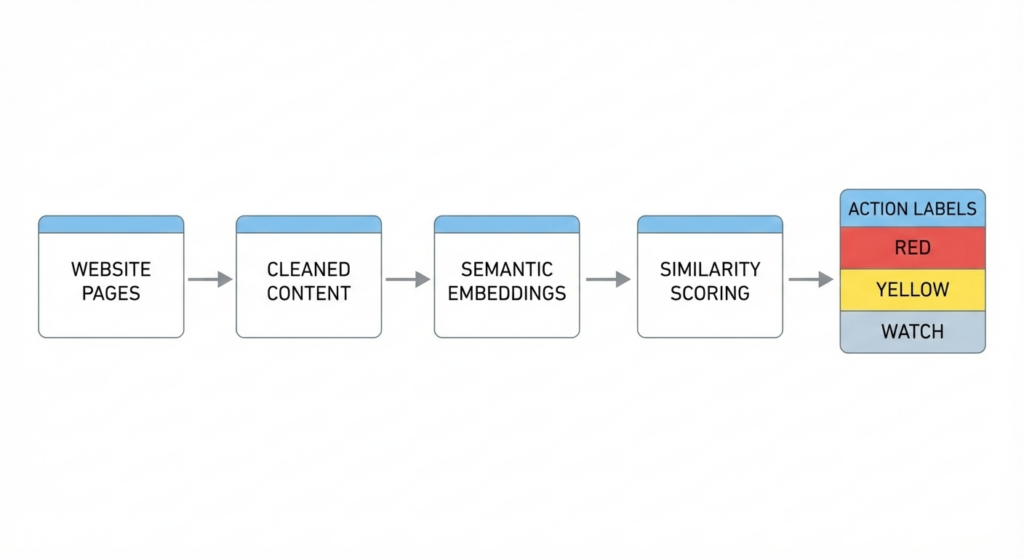

Step 1: Extract the right content (not raw HTML)

From the crawl data, I did not use raw body text as-is.

Each page was normalized into a single column called:

Final_Content

This included:

- Page title

- Primary headings

- Core descriptive content

- Product descriptions (for PDPs)

What I intentionally ignored:

- Navigation

- Boilerplate

- Cart / checkout

- Filters and pagination

The goal wasn’t cleanliness for humans – it was signal clarity for embeddings.
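A minimal sketch of what this normalization could look like, assuming the navigation, boilerplate and cart markup have already been excluded during extraction. The function name `build_final_content` and its three parameters are hypothetical; the crawl export's real column names aren't shown here, so map them to whatever your crawler produces.

```python
import re


def build_final_content(title, headings, description):
    """Join the kept page fields into one whitespace-normalized string.

    The parameter names are illustrative assumptions, not the columns of
    any particular crawler export.
    """
    parts = [title, *headings, description]
    # Drop empty fields, then collapse whitespace runs left over from HTML.
    cleaned = [re.sub(r"\s+", " ", p).strip() for p in parts if p and p.strip()]
    return ". ".join(cleaned)


if __name__ == "__main__":
    print(build_final_content(
        "Butterfly Themed Cake",
        ["Flavours", "Delivery"],
        "A  hand-decorated cake\nwith edible butterflies.",
    ))
```

Putting the title and headings first is a deliberate choice in this sketch: if the embedding model truncates long input, the most descriptive text is the part most likely to survive.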

Step 2: Focus only on Product pages

For similarity analysis, I filtered the dataset to:

Page_Type = Product

Why?

Because:

- Product ↔ Product overlap is where cannibalization hurts most

- Category pages need different handling (more on that later)

This left me with a clean products.csv containing:

URL | Final_Content

Nothing else.
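The filter itself is only a few lines. This sketch uses Python's standard `csv` module and assumes the full crawl export is a CSV with `URL`, `Page_Type` and `Final_Content` columns; the input path and the helper name `filter_products` are hypothetical.

```python
import csv


def filter_products(in_path, out_path="products.csv"):
    """Copy rows whose Page_Type is Product, keeping URL and Final_Content only.

    Returns the number of product rows written.
    """
    kept = 0
    with open(in_path, newline="", encoding="utf-8") as src, \
         open(out_path, "w", newline="", encoding="utf-8") as dst:
        reader = csv.DictReader(src)
        writer = csv.DictWriter(dst, fieldnames=["URL", "Final_Content"])
        writer.writeheader()
        for row in reader:
            # Exact match after trimming; adjust if your export labels differ.
            if (row.get("Page_Type") or "").strip() == "Product":
                writer.writerow({
                    "URL": row["URL"],
                    "Final_Content": row["Final_Content"],
                })
                kept += 1
    return kept
```

Calling `filter_products("pages.csv")` (where `pages.csv` stands in for your own export) writes `products.csv` next to the script and reports how many pages made the cut.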

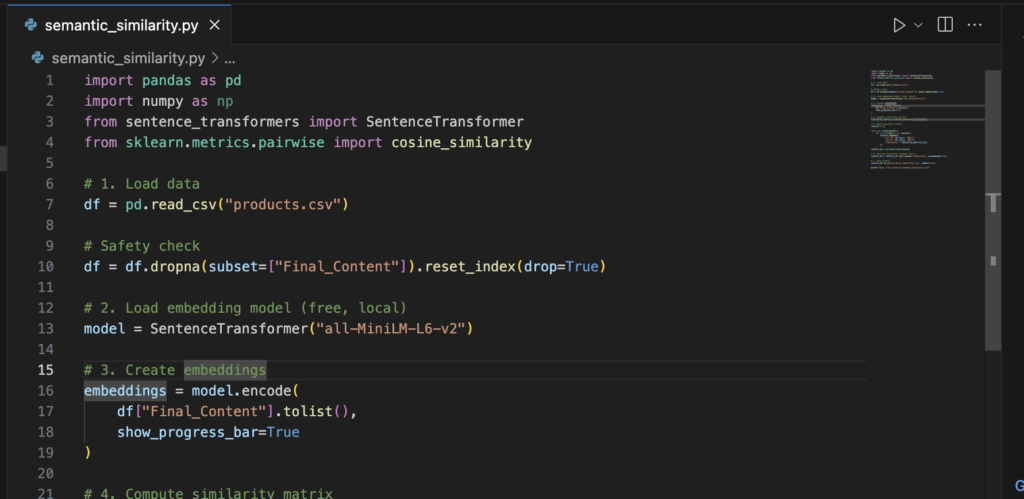

Step 3: Generate semantic embeddings (locally)

I used a free, local embedding model:

all-MiniLM-L6-v2

Why this model?

- Lightweight

- Fast

- Proven for semantic similarity tasks

- Runs fully offline once downloaded

Using Python, each product’s Final_Content was converted into a vector representation of its meaning.

No keywords.

No rules.

Just semantics.
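For anyone who wants to reproduce this step, here is a minimal sketch. It assumes the `sentence-transformers` package as the way to load the model (the exact script from this experiment isn't shown here), and the helper names `load_model` and `embed_pages` are hypothetical.

```python
def load_model(name="all-MiniLM-L6-v2"):
    """Load the embedding model (downloaded once, then served from local cache)."""
    # Imported lazily so the rest of the script works without the package.
    from sentence_transformers import SentenceTransformer
    return SentenceTransformer(name)


def embed_pages(rows, model):
    """Return {URL: embedding} for rows shaped like products.csv records."""
    urls = [row["URL"] for row in rows]
    texts = [row["Final_Content"] for row in rows]
    # normalize_embeddings=True asks for unit-length vectors, so cosine
    # similarity later reduces to a plain dot product.
    vectors = model.encode(texts, normalize_embeddings=True)
    return dict(zip(urls, vectors))


if __name__ == "__main__":
    import csv

    with open("products.csv", newline="", encoding="utf-8") as f:
        rows = list(csv.DictReader(f))
    embeddings = embed_pages(rows, load_model())
    print(f"Embedded {len(embeddings)} product pages")
```

One caveat worth checking against the model card: this model truncates long inputs, so very long product descriptions may only be partially represented in the vector.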

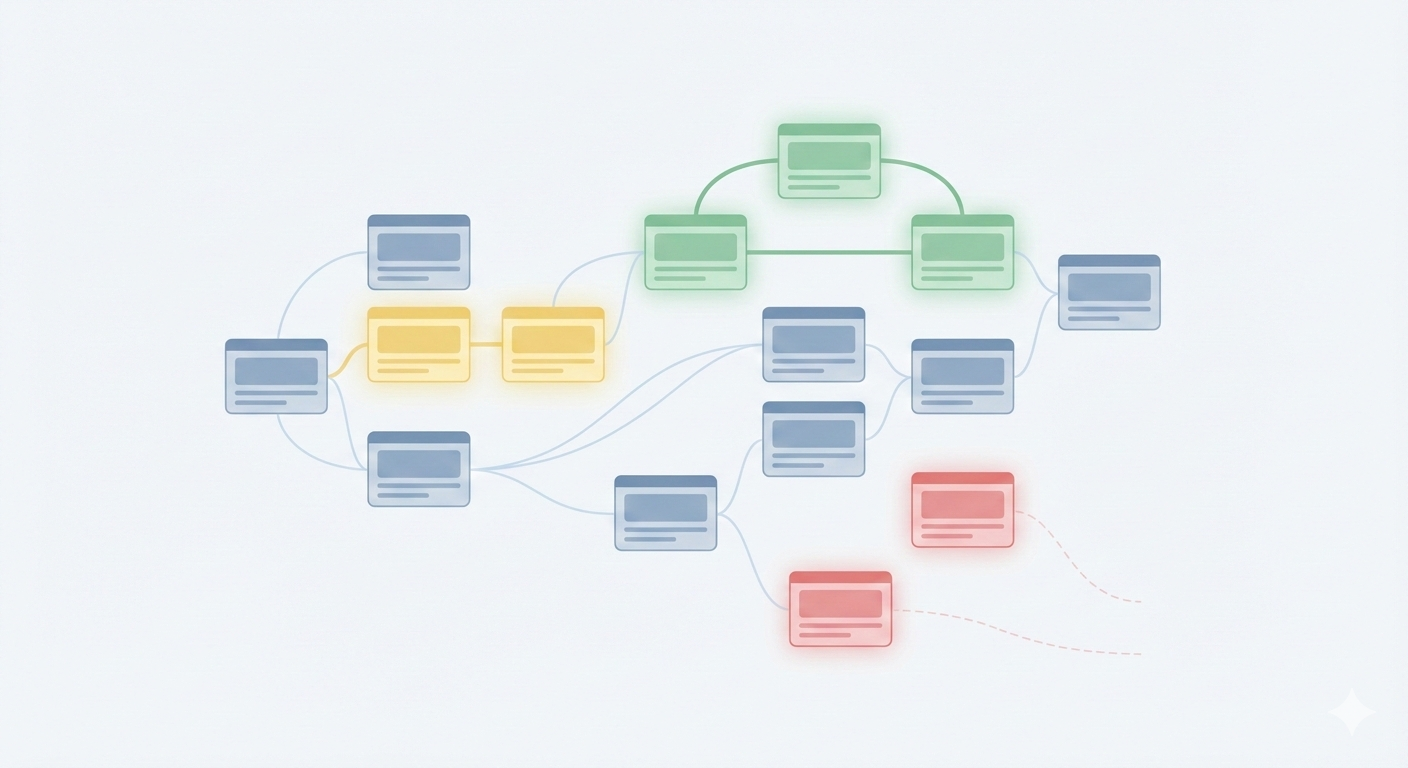

Step 4: Measure similarity between every product page

With embeddings generated, I calculated cosine similarity between every pair of product pages.

The output was a table like this:

| URL 1 | URL 2 | Similarity |
|---|---|---|
| /butterfly-themed-cake/ | /unicorn-themed-cake/ | 0.91 |
| /janmashtami-cake/ | /krishna-themed-cake/ | 0.94 |
| … | … | … |

This immediately surfaced patterns that are invisible in keyword tools.
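The pairwise comparison can be sketched in plain Python. This version assumes the embeddings are held in a dictionary mapping each URL to its vector (a hypothetical shape; the names `cosine` and `pairwise_similarity` are mine, not from the original script). It compares each pair once and sorts the strongest overlaps to the top.

```python
import math
from itertools import combinations


def cosine(a, b):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def pairwise_similarity(embeddings):
    """embeddings: {url: vector}. Returns (url_1, url_2, score), highest first."""
    pairs = [
        (u1, u2, cosine(embeddings[u1], embeddings[u2]))
        for u1, u2 in combinations(embeddings, 2)
    ]
    return sorted(pairs, key=lambda p: p[2], reverse=True)
```

For 100 pages that is 4,950 comparisons, which pure Python handles comfortably. On a much larger catalogue, a single NumPy matrix multiplication over normalized vectors would be the faster route.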

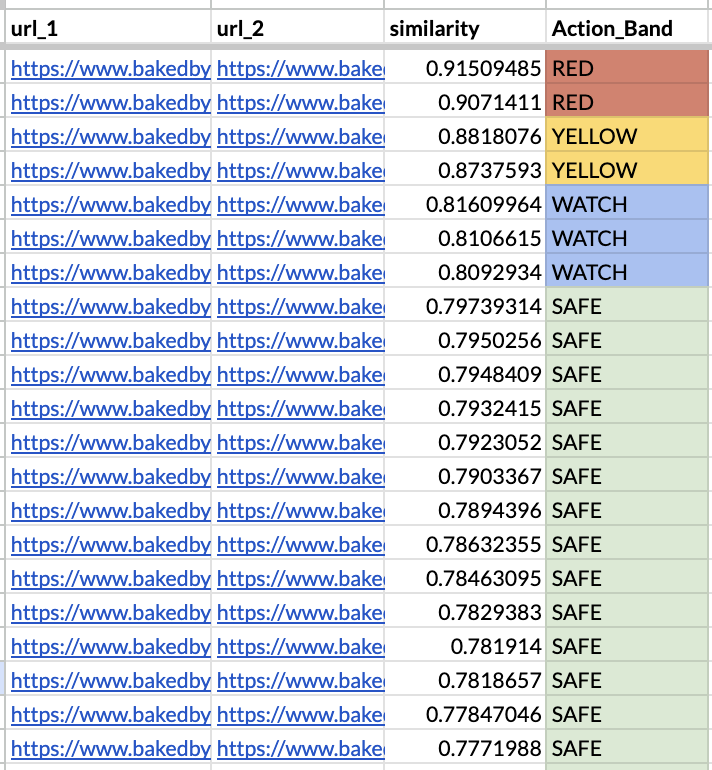

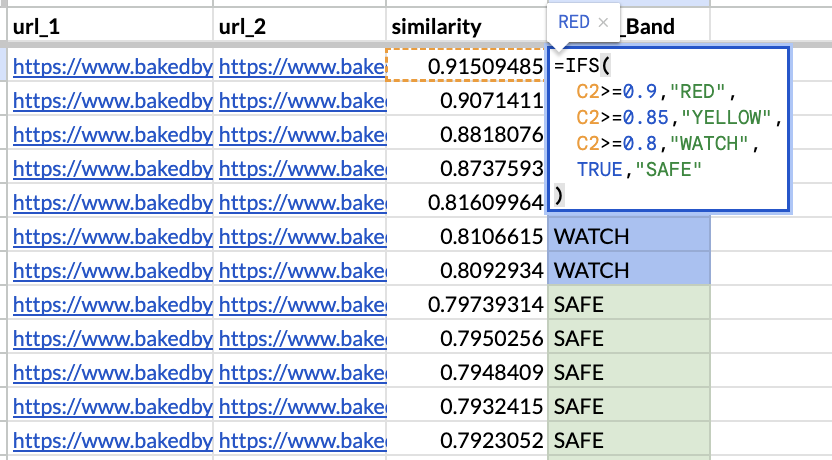

Step 5: Turn numbers into decisions (RED / YELLOW / WATCH)

Raw similarity scores aren’t useful by themselves.

So I introduced action bands inside Google Sheets.

RED ≥ 0.90

YELLOW 0.85 to < 0.90

WATCH 0.80 to < 0.85

SAFE < 0.80
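I did the banding in Google Sheets, but the same logic is easy to express in Python if you'd rather keep everything in one script. A minimal sketch, assuming each band is half-open so that an unrounded score such as 0.895 falls into YELLOW rather than between bands (the function name `action_band` is hypothetical):

```python
def action_band(score):
    """Map a cosine similarity score to an action band."""
    if score >= 0.90:
        return "RED"
    if score >= 0.85:
        return "YELLOW"
    if score >= 0.80:
        return "WATCH"
    return "SAFE"


if __name__ == "__main__":
    for s in (0.94, 0.87, 0.82, 0.61):
        print(s, action_band(s))
```

Checking from the highest threshold down means each score needs only a lower bound, which avoids gaps between the bands.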

This single step changed the entire workflow.

Instead of asking “what should we do?”, the data now said:

- 🚨 These pages are indistinguishable in intent

- ⚠️ These pages overlap but can be differentiated

- 👀 These pages are adjacent – monitor, don’t touch

No debates.

No opinions.

What the data revealed (this surprised me)

1. Category pages often compete with their own products

Examples like:

/janmashtami-cakes/

/janmashtami-cakes/janmashtami-themed-cake/

Semantic similarity: 0.90+

This isn’t a mistake – it’s a role clarity issue.

The fix wasn’t deletion or redirects.

It was:

- Category pages → act as hubs

- Product pages → act as destinations

Once that distinction is clear in content, overlap stops being harmful.

2. Not all similarity is bad

Some YELLOW overlaps were healthy.

For example:

- Anniversary cakes vs birthday cakes

- Princess cakes vs unicorn cakes

These serve adjacent but distinct emotional use-cases.

The right action wasn’t consolidation – it was intent framing, especially in the first 150–200 words.

3. WATCH pages should often be left alone

This was the hardest mindset shift.

Pages in the 0.80–0.84 range often:

- Share visual language

- Share ingredients

- Serve different buyers

Touching these prematurely would likely cause more harm than good.

Sometimes the correct SEO action is no action.

How this changed my cannibalization workflow

Before:

- Manual audits

- Endless reviews

- Subjective decisions

- Hard to justify changes later

After:

- Deterministic system

- Clear thresholds

- Explainable actions

- Easy to revisit as the site grows

Most importantly, this approach scales.

Add 50 new products?

→ Rerun the script.

→ Re-evaluate clusters.

→ Make confident decisions.

What I’d improve next time

- Cluster pages automatically (instead of pairwise review)

- Layer in internal link signals

- Track post-change impact by cluster, not page

But even in its current form, this workflow is already a massive upgrade over traditional audits.
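To make the first of those improvements concrete, here is one possible way automatic clustering could work. This is not part of the workflow described above. It is a sketch that groups pages into connected components: any two pages linked by a pair scoring at or above a threshold land in the same cluster. The function name `cluster_pages`, the `(url_1, url_2, score)` input shape and the 0.85 default are all assumptions for illustration.

```python
def cluster_pages(pairs, threshold=0.85):
    """Group URLs connected by pairs scoring >= threshold (union-find)."""
    parent = {}

    def find(u):
        parent.setdefault(u, u)
        while parent[u] != u:
            parent[u] = parent[parent[u]]  # path halving keeps trees shallow
            u = parent[u]
        return u

    for u1, u2, score in pairs:
        if score >= threshold:
            parent[find(u1)] = find(u2)  # merge the two groups

    clusters = {}
    for u in list(parent):
        clusters.setdefault(find(u), []).append(u)
    # Pages with no pair above the threshold never enter `parent`,
    # so only overlapping pages appear in the result.
    return sorted((sorted(c) for c in clusters.values()),
                  key=lambda c: (-len(c), c))
```

One trade-off to keep in mind: this kind of linking chains. If page A overlaps B and B overlaps C, all three share a cluster even when A and C score well below the threshold, so clusters still deserve a quick human look.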

Who this approach is for

This is especially useful if you:

- Run ecommerce or large content sites

- Struggle with category vs product overlap

- Want automation that improves decision quality, not just speed

- Are experimenting with semantic SEO workflows

Final thought

Keyword cannibalization isn’t really an SEO problem.

It’s a decision problem.

Once you stop guessing – and start measuring meaning – everything becomes calmer, clearer, and more defensible.

If you’re experimenting with similar workflows or thinking about automation in SEO, feel free to reach out. I’m refining this into a repeatable system and happy to exchange notes.
